The short answer is yes, as Matt Cutts goes on to explain below:

Net66 SEO: How to Apply Schrödinger to SEO

Today Google pays homage to Erwin Schrödinger on his 125th birthday. See the Doodle below:

The principle itself is quite complicated to understand, but I’ll do my best to explain it. You start off with three items: a cat, a glass phial containing a lethal poison and a small piece of radioactive material. These three items are put into a box with the glass phial set to detect radioactivity, and if the phial does detect radioactivity (which it surely will), the glass will shatter, releasing the poison and killing the cat.

At this point I’d like to stress that this is a thought experiment. No cats were harmed in the construction of this blog post.

As there is an air of inevitability about the poor cat’s demise, you can then apply the Copenhagen interpretation of quantum mechanics (you’ll have to take me at my word for that) to state that the cat is both alive and dead at the same time: alive because not enough time has passed for the phial to smash, and dead because the cat is inevitably doomed.

It’s meant to pose the question of when quantum superposition (the cat being both alive and dead) ends and reality kicks in to determine the cat’s fate, i.e. when someone peers into the box.

Interesting, no doubt, but how does this apply to SEO? Sit tight and I’ll tell you; we’ll just be making a few simple swaps.

Instead of a cat you have a blog post you’ve written. Instead of a box you have someone else’s blog or email address. The radioactive material is your request to publish the post, and the glass phial is the blog owner, who will smash (publish) a post when they detect both a request and a blog post.

So, you find the blog you wish to publish on (the box) and put in your blog post (the cat), the author (the glass phial) and your request for the author to publish it (radioactive material).

So as it stands, it is inevitable that your blog post will get published on the author’s blog. But when you put them in the box together, the author still needs to detect the request, which will then trigger him to publish your article. So, again applying the Copenhagen interpretation of quantum mechanics, your blog post is simultaneously published and unpublished, just as the cat is both alive and dead.

The only way to end this superposition is to “peer into the box” by checking the blog.

Simple really. I think?

Blog Post by: Greg McVey

Net66 SEO: View manual actions in Webmaster Tools

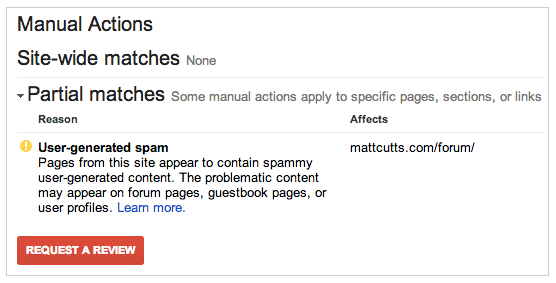

For a long time now, many people have been unsure whether they have been penalised by Google with a Manual Action penalty. This is when someone at Google takes the time to evaluate your site and then applies a penalty to it manually.

In days gone by you were never officially alerted to this and were left wondering whether a drop in your site’s performance was down to algorithmic changes or manual actions. But now Google, who have in the past promised to streamline and clarify their webspam actions, have added a feature in Webmaster Tools where you can see if your website has been the victim of a Manual Action penalty.

If you log in to your Webmaster Tools account and navigate to “Search Traffic”, you will see an option in that list entitled “Manual Actions”. Click it to see whether you have a penalty against you. Most of the time, though, you’ll see “No manual webspam actions found”, as only around 2% of websites on the internet have been affected.

If you do find that you have a manual action against you, the new reporting tool gives you a clearer indication of what the penalty is for and, in some cases, an example. In Matt Cutts’ blog post for the official Google Webmaster Blog, he uses his own website as an example of the tool’s reporting prowess; you can see the screenshot below:

Once you’ve dealt with whatever was causing the Manual Action penalty, you can submit a reconsideration request from the same place. This streamlines the whole process and should lead to much better results in the search engine listings.

Blog Post by: Greg McVey

Google’s Link Scheme Guidelines: Press Releases should use nofollow links just like adverts

It was recently confirmed that Google have changed their webmaster guidelines on link schemes. The main change concerns keyword-rich anchor text in press releases: such links should now include the nofollow attribute. As with paid advertisements, this ensures that paid links do not pass PageRank.

The precise line in the updated link schemes guidelines is:

“Links with optimized anchor text in articles or press releases distributed on other sites.”

I researched this further and found a Google Hangout video with John Mueller, a Webmaster Trends Analyst at Google, and it is clear that Google are treating press releases as advertisements, hence the change to the link scheme guidelines. Press releases will still drive a lot of traffic to your website, but take note of the new guidelines: instead of linking with your keyword, use your brand name, URL or generic anchor text, as these links can still be followed and therefore pass on PageRank.
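As a rough illustration of that advice, here is a minimal Python sketch that builds a press-release link and adds rel="nofollow" only when the anchor text is one of your target keywords, leaving brand-name, URL or generic anchors followed. The keyword list, the example.com URL and the `press_release_link` helper are hypothetical, and deciding what counts as “optimized” anchor text remains a judgement call rather than a rule Google publishes.

```python
from html import escape

# Hypothetical "money" keywords you would normally target with anchor text.
TARGET_KEYWORDS = {"cheap widgets", "buy widgets online"}


def press_release_link(url: str, anchor_text: str) -> str:
    """Build an HTML link for a press release.

    Keyword-rich anchors get rel="nofollow"; brand-name, URL or
    generic anchors are left as normal (followed) links.
    """
    is_optimized = anchor_text.strip().lower() in TARGET_KEYWORDS
    rel = ' rel="nofollow"' if is_optimized else ""
    return f'<a href="{escape(url, quote=True)}"{rel}>{escape(anchor_text)}</a>'


print(press_release_link("http://www.example.com/", "Cheap Widgets"))
print(press_release_link("http://www.example.com/", "Example Ltd"))
```

The first call produces a nofollow link because “Cheap Widgets” matches a target keyword; the second, a brand name, stays followed.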

Another interesting point is that Matt Cutts has in the past publicly said that links within articles and press releases do not pass link juice, when many people who work in the SEO world know that is not true in the slightest!

It seems to me that Google are taking a more direct approach to paid advertising and link schemes, and also to the overuse of paid links within articles. Take a look at the Google Hangout video with John Mueller and let us know what you think.

Video: http://www.youtube.com/v/z6p7vO04Vsc?fs=1

Blog Post by Jordan Whitehead

What can I do to help my SEO company get great results

This is a question often asked by website owners who employ the services of an SEO practitioner, and it is a great question.

Before I answer this, let us consider what Google is looking for when it indexes a site.

In very general terms, it is looking for sites that have good traffic levels, low bounce rates, good links from well-ranked sites and plenty of good, relevant text.

A lot of the work that needs doing to get a website ranked is technical and statistical and needs to be coordinated, so if you employ an SEO practitioner, let them do all that; it is what you are paying for.

Search engines like to see relevant links to your site. So if you are a business, talk to your suppliers and get them to put a link on their website to yours, and if there is a trade association, do the same. You often know and talk to other companies in the same field, so get a link from them too.

What you must not do is go crazy about links. A link to your site from a Far Eastern gambling site is likely to do you more harm than good, unless you are a gambling website, in which case it counts as a relevant link and will be positive.

Use social media. A lot of businesses cannot see the benefit, but do not forget the old adage “There is no such thing as bad publicity”; it is as true today as ever.

Go on to Facebook, Google+, Twitter and as many other social media sites as you can find, and start pages for your company.

If you are a builder, put up images of buildings you have built, including before and after pictures; if you sell industrial widgets, tell the world just what these widgets do and what value they offer, and include links back to your main website.

This will show search engines how far and wide your reputation has spread, and earn you SEO points for it.

A note of caution: Google is not daft, far from it, so if you create lots of social media pages and lots of blog posts overnight, you will be rumbled and penalised.

Neil McVey

Is Web design a factor in SEO

Like every other question that there is about SEO, it could be answered with the phrase “Well, yes and no.”

Net66 SEO: Matt Cutts on Linking 20 Sites Together

First of all, 20 different sites on 20 different domains is quite a lot. Like, really a lot. So why would you want to link them all together? See Matt Cutts’ video response here:

Net66 SEO: Link Building Techniques to Avoid Post Penguin

Even before Penguin there was a lot of buzz on the internet about what would work, what wouldn’t, what you should remove and what you should add. But now that the dust has (seemingly) settled, it’s becoming apparent that certain ways of building links are not only ineffective but will actively hurt your site’s performance in the SERPs. Here are some things NOT to do when you’re link building now:

Quantity over Quality

Sure, having a link profile with hundreds of linking root domains might look good, but it’s not all that effective if those domains are low-quality sites with little relevance to your own. I’ve often said this and will continue to say it: relevance is key! I would rather have one link from a relevant, good-quality website than one hundred links from irrelevant, poor-quality websites. A whole afternoon’s work can be classed as a success even if you only get one good link. If your boss gets on your case for so few links, educate them.

Ignoring Your Own Site

People get hung up on guest posting and reserve their best content for other blogs, as this increases the likelihood of the other blog accepting and publishing it and providing you with a link from a relevant blog. But why? I know you get a great link from it, but what about the links the content attracts for the blog where it’s published? If the content is that good, it’ll be worth linking to and will benefit that blog. You’ll get some secondary link juice from this, but not a lot. So why not publish that content on your own site and receive all the organic links to it yourself?

Anchor Text Angst

OCATD: Obsessive Compulsive Anchor Text Disorder. People would spend so much time hunting for that one link where they could insert their own anchor text and use it to boost rankings. With the advent of Penguin, this is no longer going to work. In some cases it’s going to work against you, especially if your link profile is so skewed that over 50% of it is keyword anchor text. Think about it: if people were linking to your site organically, would they choose to link to you with a keyword? And if so, what are the chances of a whole bunch of people all miraculously choosing the same phrase? That strikes me, and now Google thanks to Penguin, as a little odd.
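To make the “over 50%” figure concrete, here is a minimal Python sketch that works out what share of a link profile uses exact keyword anchor text and which phrase repeats most often. The sample anchors and keyword set are made up for illustration, the exact-match comparison is a simplifying assumption, and the 50% threshold is the rule of thumb from this post rather than a limit Google has published.

```python
from collections import Counter


def keyword_anchor_share(anchors: list[str], keywords: set[str]) -> float:
    """Return the fraction of links whose anchor text exactly matches a target keyword."""
    if not anchors:
        return 0.0
    hits = sum(1 for a in anchors if a.strip().lower() in keywords)
    return hits / len(anchors)


# Hypothetical link profile: the anchor text of each inbound link.
anchors = [
    "cheap widgets",
    "Cheap Widgets",
    "cheap widgets",
    "cheap widgets ",
    "Example Ltd",
    "www.example.com",
]
keywords = {"cheap widgets"}

share = keyword_anchor_share(anchors, keywords)
print(f"{share:.0%} of links use keyword anchor text")  # 67% of links use keyword anchor text

# The "same phrase" problem: how often does the most common anchor repeat?
print(Counter(a.strip().lower() for a in anchors).most_common(1))  # [('cheap widgets', 4)]

if share > 0.5:
    print("Link profile looks skewed towards keyword anchors")
```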

No News is Good News

People publishing monthly or bimonthly press releases are either gifted with an incredibly high amount of foresight, or they’re actually fabricating “news” to release. The bottom line is: if you have no news, you have nothing to report. You shouldn’t seek out the tiniest little thing and publish a press release about it. Google will get wise to this, as press releases are usually reserved for product launches, rebranding and other such large projects, not the fact that you’ve added a new page to your website.

There are many other habits that people are finding hard to kick, but as it stands these top my podium of the poor link-building practices I see most often.

What are yours?

Blog Post by: Greg McVey

Disavow Tool can help with Penguin According to Matt Cutts

One of the most talked-about topics since the launch of Penguin 2.0 has been whether we should be afraid of the Disavow Tool or whether it can be trusted to help penalised sites recover. Well, Google’s own Matt Cutts (head of the webspam team) publicly answered a question on Twitter, stating that the Disavow Tool can help with a Penguin impact.

This has been a big relief to the many webmasters who have been impacted, as they can now use the tool without hesitation. The only problem is that we still do not know for sure how long it takes a website to recover from a penalty after using the Disavow Tool. My personal approach would be to use the Disavow Tool on low-quality and irrelevant links pointing to your website, and then either submit a reconsideration request through Webmaster Tools (this applies if you have a manual action) or use the Fetch as Google option and ping your site to get it recrawled as quickly as possible.
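The Disavow Tool itself takes a plain-text file with one entry per line: a full URL to disavow links from a single page, or a `domain:` line to disavow every link from a site, with lines starting with `#` treated as comments. Below is a minimal Python sketch that assembles such a file from lists you have already reviewed by hand; the domains, URL, note and the `build_disavow_file` helper are placeholders, and picking which links to disavow is still a manual job.

```python
def build_disavow_file(domains: list[str], urls: list[str], note: str) -> str:
    """Return the text of a disavow file: a comment, domain: lines, then single URLs."""
    lines = [f"# {note}"]
    # domain: entries disavow every link from that site; normalise and de-duplicate them.
    lines += [f"domain:{d}" for d in sorted({d.strip().lower() for d in domains})]
    # Plain URLs disavow links from that one page only.
    lines += [u.strip() for u in urls]
    return "\n".join(lines) + "\n"


text = build_disavow_file(
    domains=["spammy-directory.example", "link-farm.example", "Link-Farm.example"],
    urls=["http://blog.example/low-quality-post.html"],
    note="Low-quality links found in link audit",
)
print(text, end="")
```

Save the printed text as a .txt file and upload it through the Disavow Tool; the duplicate “Link-Farm.example” entry collapses into a single `domain:` line.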

If the Disavow Tool works as Matt Cutts says, it cannot be ignored: using it effectively could be the difference between a successful recovery and further penalisation by the Panda update, which is now a rolling update. It will also benefit your website in the long run, as Google could roll out another Penguin update at any time, and if you have removed the links that are harming your site, in theory you shouldn’t be affected.

Although some people do not believe most of what Matt says, the message he is trying to get across to webmasters is clear: Google rewards high-quality websites and penalises websites it considers to be spam. That is why Google released this tool: to help us recover and realise what we have done wrong.

Blog Post by Jordan Whitehead

Net66 News: Google Publishership Becoming a Factor in Rankings?

Last night, the SEO world was given a different look at how Publishership could affect websites’ rankings. As reported on several other SEO blogs, travelstart.co.za began to display an authorship profile (see below). As you can see from my own blog posts, tagging yourself with rel="author" allows your Google+ profile picture to be displayed in the search engine results pages.


OK, so authorship on a blog isn’t an uncommon thing these days, but what was different about this page was that it contained no Google Authorship markup. It also became apparent that there was no Publishership markup in there either. So how is this website displaying the Google+ profile picture?

It seems to stem from the Google+ page itself. Because you first need to verify that you own your website on your Google+ page, Google could be drawing on this information to enhance its search results. Strange, though, that there is no markup for Authorship or Publishership.
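If you want to check a page for this markup yourself, here is a minimal Python sketch that uses the standard library’s `html.parser` to list any `rel="author"` or `rel="publisher"` links in a page’s HTML. It assumes you already have the HTML as a string (fetching the page is left out), and the sample markup and Google+ URL are made-up placeholders. Empty lists for both, as reported for travelstart.co.za, would suggest the profile picture is not coming from on-page markup.

```python
from html.parser import HTMLParser


class RelMarkupFinder(HTMLParser):
    """Collect hrefs of <a> and <link> tags whose rel includes author or publisher."""

    def __init__(self) -> None:
        super().__init__()
        self.found: dict[str, list[str]] = {"author": [], "publisher": []}

    def handle_starttag(self, tag, attrs):
        if tag not in ("a", "link"):
            return
        attr_map = dict(attrs)
        # rel can hold several space-separated values, e.g. rel="me author".
        rel_values = (attr_map.get("rel") or "").lower().split()
        for kind in ("author", "publisher"):
            if kind in rel_values:
                self.found[kind].append(attr_map.get("href") or "")


def find_rel_markup(html: str) -> dict[str, list[str]]:
    finder = RelMarkupFinder()
    finder.feed(html)
    finder.close()
    return finder.found


page = (
    '<html><head><link rel="publisher" href="https://plus.google.com/123"></head>'
    "<body><p>No author link here.</p></body></html>"
)
print(find_rel_markup(page))
```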

Apparently, shortly after this was noticed, Google pulled the feature and the website stopped displaying its Google+ profile picture in the results. So this was either a very strange bug in Google’s architecture or Google testing something out.

What do you think?

Blog Post by: Greg McVey

Ranking on Google – So What’s Different?

Well, as I’m sure you all know by now, Google has yet again been making big algorithm changes to the way it ranks websites, or should I say YOUR website.


The most recent is the Penguin update. In short (at the risk of oversimplifying it), the manual checking, where people were hired to review websites, has largely come to an end. Google has been able to take the information it gathered from these manual reviewers and put it into a computer program; now all websites can be automatically “critiqued” without a human eye ever glancing over the page. I’m sure there are still some manual reviewers, but their numbers have been cut by a large amount, so once again the algorithm rules the roost!

So what’s new? How has building the big Penguin and Panda processes into the algorithm itself changed things?

Well, if we go back 18 months to two years, the internet was full of all kinds of rubbish, or spam as you might call it. People were throwing out “auto-blogs”, which automatically “scraped” the internet for content that suited the keyword they were programmed to search for and then posted it automatically to their website. It worked very well for some people, too!

However, the Panda update put an end to that. To sum it up, Panda was created mainly to cut out any pages or posts that offered no value. This meant no duplicate content and no “thin” pages or websites. Basically, if what you submitted wasn’t yours, or wasn’t benefiting the user, then you were “outta there!”

Then there’s the Penguin update. Once again, to sum up the objective of this algorithm change: Google went after backlinks. By now I’m sure Google is fully aware of what a natural-looking backlink profile looks like. Every niche and every market probably has its own little quirks, but Google most likely has a clear picture of the patterns all websites are expected to fit.

If you’re not behaving properly in how you market your website, which means collecting backlinks genuinely, or if the websites you are backlinking with carry no weight or relevance, then you too risk being “slapped” or penalised. Penguin was designed to make it harder for the average person to buy a backlinking tool and throw out hundreds, if not thousands, of backlinks to their website (bringing the end of grey hat and black hat methods a step nearer), and it has certainly succeeded!

The Algorithmic Conclusion

So you may think: game over! We can never rank for anything now; the bar has been raised too high!

You know what I say? Good riddance! We have arrived at a time in Google’s existence where I see opportunity. Chances are the competitors you would have been up against have either slipped down the rankings or disappeared completely. So, to capitalise on this, all you really need to do is follow the rules. Don’t try to con Google; it’ll never work. Write for the user and let Net66 worry about getting you ranked. That, my friends, will give you the best possible chance of succeeding, whatever market you’re in.

Net66 News: New Algorithm Update “Over Multiple Weeks”

Google’s Matt Cutts has given us an update on the latest Algorithm Changes. In a tweet responding to an SEO company that had highlighted what appeared to be unnatural linking practices, Matt Cutts stated:

On the face of it, that’s a pretty big update. Thinking about it more deeply, this algorithm change has to be pretty big, considering they’re having to roll it out over several weeks. To be honest, this doesn’t really surprise me. There’s been a lot of talk in the SEO community about strange changes and fluctuations in rankings and traffic. One of the strangest claims has been rankings moving up in the SERPs while traffic falls, which seems to defy logic.

So what do you think these new updates are for? Is this the new Soft Panda algorithm update? Have they released another anti spam update?

Blog Post by: Greg McVey

Net66 News: Google Officially Changes Advice on how to Boost Rankings

For a while now Google’s official advice on how to improve your rankings has been stated as the following:

Ranking

However, these have changed recently to put the onus on creating a quality site and user experience, rather than building links. The new text has been edited to read:

Ranking

Sites’ positions in our search results are determined based on hundreds of factors designed to provide end-users with helpful, accurate search results. These factors are explained in more detail at http://www.google.com/competition/howgooglesearchworks.html.

In general, webmasters can improve the rank of their sites by creating high-quality sites that users will want to use and share. For more information about improving your site’s visibility in the Google search results, we recommend visiting Webmaster Academy which outlines core concepts for maintaining a Google-friendly website.

They’ve also changed the link offering advice on how to maintain and improve your visibility in search engines, so that it now points to their new Webmaster Academy instead of their Webmaster Guidelines.

Change is a natural part of Google, but what is different about this change is that Google kept it relatively quiet. Usually there’s a buzz around the latest updates from Google, but this time it’s as if they have crept in during the night and quickly changed a few words. The sly lot.

What do you think of the new changes?

Blog Post by: Greg McVey

The Evolution Of Google

If you were asked to describe to someone what Google actually does you would probably describe a search engine that we all know to be the most popular and overall, the best.

Many others have tried to copy Google over the years, and many have failed along the way. There are more than we could possibly mention but the likes of Lycos, AOL, Snap, Magellan may jog your memory.

The latest example would be Bing (rumoured to stand for “Because It’s Not Google”). Bill Gates may never publicly use those exact words, but he would have loved to have started Google, or even to have half its market share!

So, what has Google got that nobody else has?

What Google possesses as an organisation is an understanding of its users. Google sets out to provide the best possible user experience. Most of Google’s competitors are aspiring to be Google. This gives Google a huge advantage, because while most of its competitors are second-guessing Google’s methods, Google is busy developing new products and diversifying its products and services.

Google is rumoured to be on the verge of overtaking Apple in the number of application downloads. That is no small feat, as Apple has dominated that marketplace and has a very big and very loyal following.

As well as Google Play App Store we are seeing many more weird & wonderful developments from Google, not least the very controversial Google Glass.

So, do Google have competitors? In all honesty, you could probably make a case for both sides, because Google is so diverse in 2013 that it really is hard to find a company with the same ambition for development.

The Financial Times recently claimed that Google’s closest comparison would be General Electric in the 19th century, as its domination was multifaceted and came at the start of the age of electrification. You could argue that the age of computerisation was pretty much initiated by Microsoft, but are they in the same ballpark as Google? I, and many others, would think not!

The conclusion we can draw from this is that Google are not to be underestimated. OK, so there is Google+, which has not yet covered Google in glory, but how many times have you thought “that’ll never work”? Exactly!

Is it feasible that in years to come we could all be walking around wearing Google Glass and using the internet via a pair of glasses? If Google think there is a chance we could, then there is!

The great thing for us is that we get to work with Google’s diverse product range on a daily basis, and, as many clever people will tell you:

“The more you learn, the less you know!”

This could quite easily be the motto of Net66, because our team have a thirst for knowledge and a passion for new technologies and strategies, but are realistic enough to admit there is a lot we have to improve on.