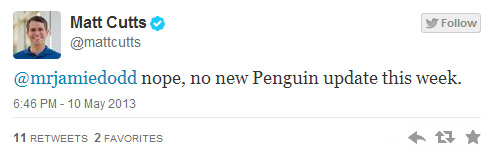

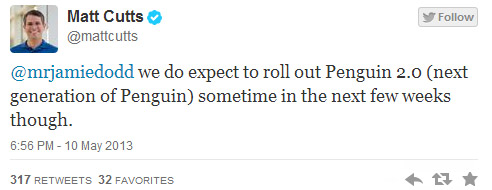

What a week so far. Is it really only Wednesday? We’ve had confirmation from Matt Cutts himself that Penguin is only a few weeks away, prompting a raft of people to ask about the best way to vet their previous, maybe not so squeaky clean, link building techniques. Naturally we have a lot of advice for people and are willing to help out, even offering a guide on how to prepare for Penguin 2.0.

There was a brief respite when we all took a quick time out to play the Atari classic Breakout, but now we have even more news from Google and that great source of information, Matt Cutts. Once again it seems Google is tightening the noose on link networks. Their previous high-profile target was the SAPE network, which was penalised and vanished from the search engines. The knock-on effect would have been bad for a lot of sites, which would have lost the value of any links from that network. And, obviously, after losing that many links at once, their rankings would have dropped drastically.

This news has come by way of Matt Cutts himself once again. He’s recently posted a few tweets outlining his and Google’s desire to really punish sites that benefit from spam, and they’re definitely cranking up the pressure on link networks. Take a look at the tweets below to see what has been said:

So, without specifying which link network has been hit, Matt Cutts has implied that one has: Google “took action on several thousand linksellers”, which has to amount to a link network. I still haven’t heard anything about which network was hit, but I’m sure all will be revealed over the coming days.

Which Link Network do you think has been hit?

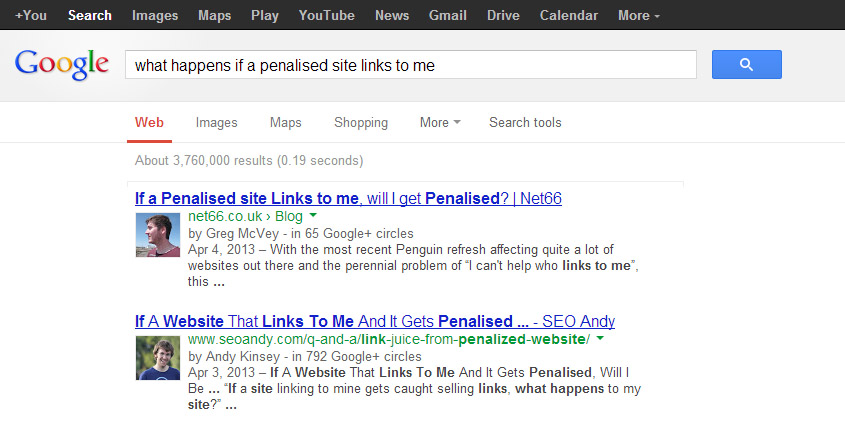

Blog Post by: Greg McVey.