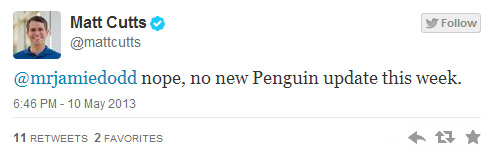

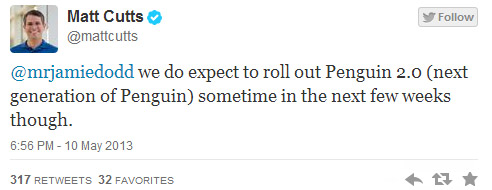

Recently I brought you news of Google saying that the new Penguin update was still a few weeks away. Less than two weeks later, however, they've rolled the new update out and confirmed it. PANIC! No, don't. You should only be panicking if you or your SEO company have been engaging in link practices that don't abide by Google's Webmaster Guidelines.

So why Penguin 2.0 and not Penguin 4? It's being referred to as Penguin 2.0 because changes have been made to the Penguin algorithm itself, rather than just a regular data refresh. It's a big enough update that Google have said around 2.3% of English queries will be affected to a degree that "regular users" will notice. I know 2.3% seems minimal, but given the sheer volume of English-language searches made every day, that figure could easily mean tens or even hundreds of thousands of affected websites.

There's still the question of what caused the unusual fluctuations in rankings and traffic a few weeks ago. Google themselves say it wasn't an update to the Penguin algorithm, so could it have been a data refresh before the new Penguin algorithm went live? Or was it just another Panda refresh, which Google no longer confirm? It's unclear, as Google haven't commented on this.

The good news about this update is that Penguin directly targets spam and black hat tactics. This should lead to more accurate search results, with sites that have engaged in black hat link campaigns dropping out of the rankings. So if a competitor has always used black hat techniques to rank above you and you've thought it unfair, look who has the last laugh now!

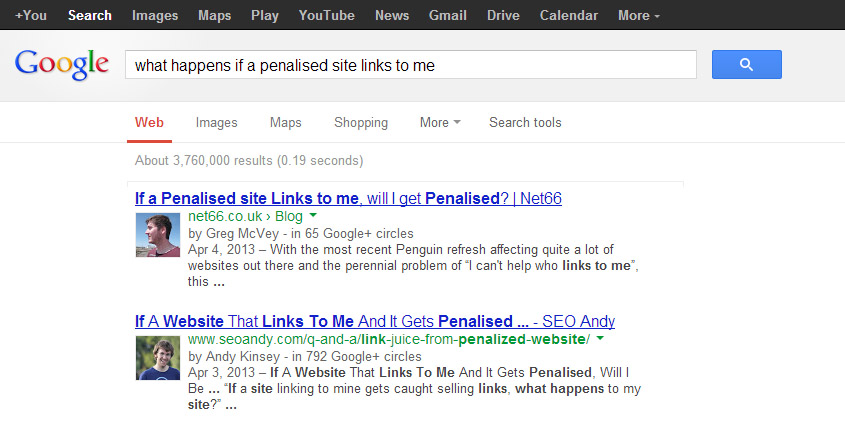

So, has your site been affected?

Blog Post by: Greg McVey