One of the most challenging aspects of SEO is building a web page that is optimised not only for the algorithms but also for the user. The list of factors is endless, but I am going to show you what I, and the people here at Net66, think is the best way to optimise a web page.

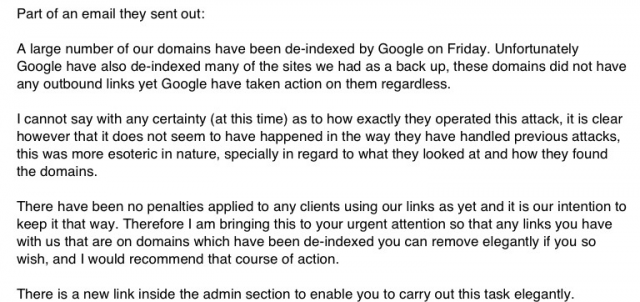

Gone are the days when we could rank solely off meta data and keyword stuffing. Search engines have advanced over the years, and it is now all about quality and relevance for the audience. There are also many ways of generating traffic to your website, such as social media, blogs and emails.

Crawling and Accessibility

This is crucial to check on your web page, as it could impact the performance of your website. Search engines read websites through an automated bot, which is programmed to look for specifics. Some of these specifics include:

• Is the page with the content on the correct URL?

• Is this URL user-friendly?

• Is the robots.txt file blocking the robots from crawling any pages?

• If the page is down, are you using the correct status code?

These are not all the specifics, but to me they are the most important. So, what do they mean? A friendly URL structure helps the bots read your website more efficiently, which can only benefit you, and it also makes it easier for users to understand what the content is about. The robots.txt file is a set of directives that controls which pages robots may crawl.

It is recommended that you check this file to make sure you are not blocking any pages you wish to be crawled. Lastly, we all experience technical issues with our websites sometimes (if not, you must be doing it wrong…), and when this happens it is important to use the correct status code.
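One way to run that check is with Python's standard `urllib.robotparser` module. The snippet below is only a minimal sketch: the robots.txt rules, the example.com URLs and the `blocked_urls` helper are all made up for illustration, and a real check would load your live file instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (an assumption for this sketch).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /checkout/
"""

# Hypothetical pages we would like the bots to be able to crawl.
PAGES = [
    "https://www.example.com/",
    "https://www.example.com/swimming-goggles/",
    "https://www.example.com/checkout/basket",
]


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "*") -> list[str]:
    """Return the URLs that the given rules stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, PAGES):
        print("Blocked by robots.txt:", url)
```

Any page that shows up in the output and that you actually want indexed is worth a second look at your rules.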

If a page is down temporarily, you should return a 503 status code. If you need to redirect a page to a new address permanently, use a 301 redirect.
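The rule above can be sketched in a few lines of Python. This is an illustration rather than a real server: the `response_for` function, the paths and the one-hour `Retry-After` value are all assumptions made for the example.

```python
from http import HTTPStatus


def response_for(
    path: str,
    moved: dict[str, str],
    in_maintenance: set[str],
) -> tuple[int, dict[str, str]]:
    """Pick a status code and headers for a requested path.

    moved maps old paths to their permanent new addresses;
    in_maintenance holds paths that are temporarily down.
    """
    if path in moved:
        # Permanent move: 301 plus the new address in the Location header.
        return HTTPStatus.MOVED_PERMANENTLY.value, {"Location": moved[path]}
    if path in in_maintenance:
        # Temporary outage: 503, with a hint (in seconds) for when to retry.
        return HTTPStatus.SERVICE_UNAVAILABLE.value, {"Retry-After": "3600"}
    return HTTPStatus.OK.value, {}


if __name__ == "__main__":
    moved = {"/old-goggles/": "/swimming-goggles/"}  # hypothetical paths
    down = {"/sale/"}
    for path in ("/old-goggles/", "/sale/", "/"):
        print(path, response_for(path, moved, down))
```

Checking the redirect first is a choice made for this sketch; what matters is that a temporary outage never gets answered with a 404 or a 200 error page.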

Content

This is the most important factor when it comes to successful SEO. The term ‘content is king’ is widely used by webmasters throughout the industry, and they are correct!

As the search engine algorithms have shifted and advanced over the past couple of years, the two words which constantly arise are “quality” and “relevance”.

This is exactly what your content should be: high quality and relevant to your niche. Obviously, we still have to abide by Google's Webmaster Guidelines with regard to uniqueness and keyword stuffing (you know the drill).

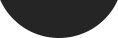

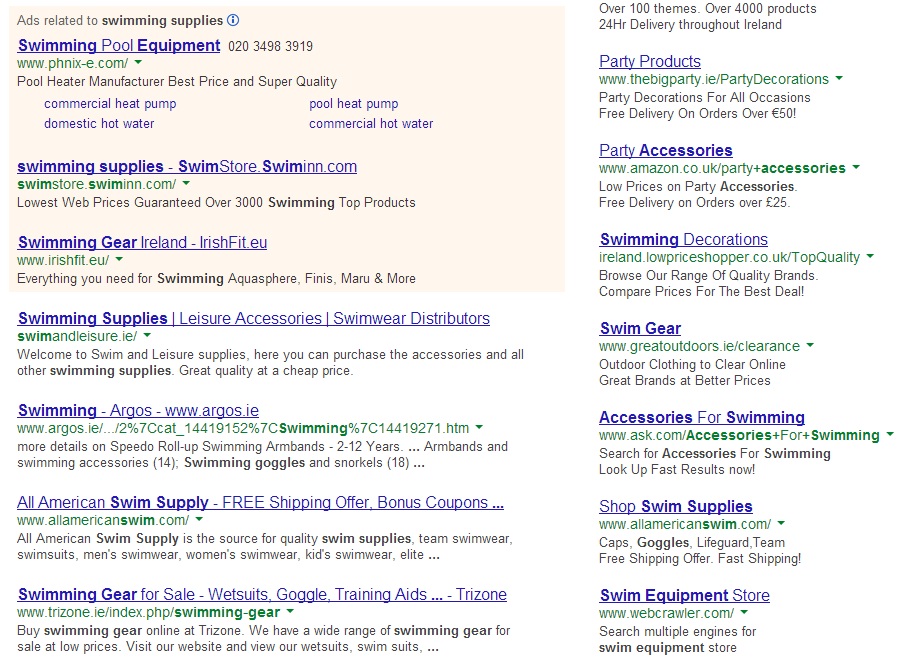

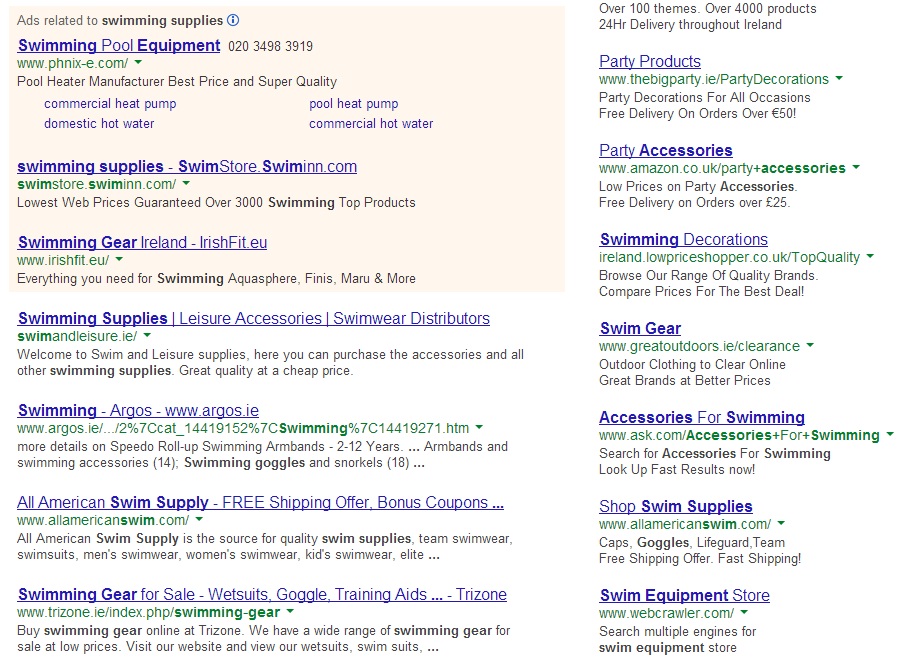

At the same time, you need to try and target a specific keyword without breaking the rules… But how do we do that? Google does not match the exact word 100% of the time; it now picks up other relevant keywords as well. Here is a prime example:

As you can see, Google highlights words relevant to swimming supplies, such as swimming goggles, swimming gear, swimming supply and equipment. We can therefore include these other relevant keywords in our body text, meta data, h1s, h2s and image alt attributes.

This shows Google that you have done some research, and it will reward you with extra credibility. For ranking purposes, the exact keyword you wish to rank for needs to be included in the meta title and then broken up in the description, with a 2-3% keyword density in the body text.
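If you want to see roughly where your body text sits against that 2-3% figure, a quick calculation helps. The sketch below assumes one particular definition of density (exact phrase occurrences divided by total word count, as a percentage); other tools count it differently, and the `keyword_density` function and sample text are made up for illustration.

```python
import re

WORD_PATTERN = re.compile(r"[a-z0-9']+")


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of words in text accounted for by exact keyword matches.

    Assumed definition: phrase occurrences / total words * 100.
    """
    words = WORD_PATTERN.findall(text.lower())
    phrase = WORD_PATTERN.findall(keyword.lower())
    if not words or not phrase:
        return 0.0
    size = len(phrase)
    # Slide a window over the words and count exact phrase matches.
    hits = sum(
        1
        for start in range(len(words) - size + 1)
        if words[start:start + size] == phrase
    )
    return 100.0 * hits / len(words)


if __name__ == "__main__":
    body = "Our swimming supplies include goggles, caps and floats for every swimmer."
    print(f"{keyword_density(body, 'swimming supplies'):.1f}%")
```

Treat the number as a sanity check against keyword stuffing rather than a target to hit exactly.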

Try to break up your content with images, bullet points, videos and short paragraphs. This keeps the audience interested in the content and improves user experience, which is another factor Google takes into consideration.

On that note, Google seems to be rewarding more engaging content, so do not be shy: add your sense of humour (if you have one, that is) and start engaging with as many people as possible.

Internal Links

When I first started out with SEO, I used internal links for ranking purposes, linking to an internal page with exact-keyword anchor text. What I then found out is that it didn’t look natural, and there was always the risk of getting a telling-off from Google.

I now use my internal links wisely. I create user-friendly internal links that point to the pages deemed most valuable for a certain phrase or phrases, and still use some keyword anchor text linking to the page I want to rank.

I find that a good natural mix of anchor text is the way forward, especially after Penguin 2.0. Internal links also create paths for the bots to crawl your website: the more paths, the quicker your website will get read and indexed.
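To get a feel for how natural your own anchor text mix looks, you can tally the anchor text of the internal links on a page. This is a rough sketch using only Python's standard library; the `InternalLinkCollector` class, the `anchor_text_counts` helper, the sample HTML and the www.example.com host are all assumptions for the example, and it is not how Google measures anything.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urlparse


class InternalLinkCollector(HTMLParser):
    """Collect (href, anchor text) pairs for links that stay on site_host."""

    def __init__(self, site_host: str):
        super().__init__()
        self.site_host = site_host
        self.links: list[tuple[str, str]] = []
        self._href: str | None = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        parsed = urlparse(href)
        # Relative links and links to our own host count as internal.
        if parsed.scheme in ("", "http", "https") and parsed.netloc in ("", self.site_host):
            self._href = href
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def anchor_text_counts(html: str, site_host: str) -> Counter:
    """Count how often each (lower-cased) anchor text is used internally."""
    collector = InternalLinkCollector(site_host)
    collector.feed(html)
    collector.close()
    return Counter(text.lower() for _, text in collector.links)


SAMPLE_HTML = (
    '<p><a href="/goggles/">Swimming goggles</a> '
    '<a href="https://www.example.com/goggles/">our goggles range</a> '
    '<a href="https://other.example.org/">elsewhere</a> '
    '<a href="/goggles/">swimming goggles</a></p>'
)

if __name__ == "__main__":
    for text, count in anchor_text_counts(SAMPLE_HTML, "www.example.com").most_common():
        print(count, text)
```

If one exact-keyword phrase dominates the tally, that is a hint the links may not look natural.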

Blog Post by Jordan Whitehead